Artificial intelligence: new friend or new foe of cybersecurity?

Artificial Intelligence (AI) is a field of computer science that aims to create machines capable of operating by themselves and performing tasks that normally require human intelligence. The launch of OpenAI’s ChatGPT (Generative Pre-trained Transformer) chatbot at the end of 2022 left a lasting impression. It was a major milestone, as it was one of the first tools of its kind to be made widely available to the general public, and it is an excellent example of what artificial intelligence can look like. Generally speaking, AI involves the development of computer programs capable of learning, reasoning, planning, perceiving, communicating and making decisions autonomously. These breakthroughs are revolutionizing cybersecurity. AI now makes it possible to detect cyber threats faster and more efficiently. It also provides more advanced capabilities than traditional methods, particularly when it comes to threat prevention and response. While AI is a particularly powerful new tool, it also raises a number of questions in terms of data security. As there’s a flip side to every coin, it’s worth looking at the pros and cons of AI for cybersecurity.

Machine learning algorithms now play an even greater role in information systems security. They are now capable of analyzing massive amounts of data, in real time, with the aim of identifying patterns of suspicious behavior and detecting malicious activities before they can cause any serious damage. AI can help anticipate attacks before they happen, by constantly monitoring networks and looking for potential vulnerabilities. These systems can also be used to reinforce password security or detect phishing attempts.
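
To make the idea of spotting suspicious patterns more concrete, here is a minimal sketch of anomaly detection on a single signal. It assumes a hypothetical list of failed-login counts per minute and uses a simple robust statistic (median and median absolute deviation) in place of a trained machine learning model; the function name `flag_anomalies` and the threshold of 3.5 are illustrative choices, not taken from any particular product.

```python
from statistics import median


def flag_anomalies(counts, threshold=3.5):
    """Return the indices of values whose robust z-score exceeds the threshold."""
    med = median(counts)
    # Median absolute deviation: a measure of spread that outliers barely affect.
    mad = median(abs(c - med) for c in counts)
    if mad == 0:
        # All typical values are identical: anything different stands out.
        return [i for i, c in enumerate(counts) if c != med]
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return [
        i for i, c in enumerate(counts)
        if 0.6745 * abs(c - med) / mad > threshold
    ]


# Hypothetical failed-login counts per minute for one account.
failed_logins = [2, 3, 1, 2, 4, 3, 2, 58, 3, 2]
suspicious = flag_anomalies(failed_logins)
print(suspicious)
```

A real detection system would combine many such signals and learn what is normal for each user or machine, but the principle is the same: model typical behavior, then flag what deviates from it.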

Machine learning is a sub-discipline of AI that uses a variety of techniques to enable a computer to train itself on data and learn to perform specific tasks. AI can help save a considerable amount of time by automating certain security tasks, reducing the workload for security teams and improving process efficiency. AI systems can be used to monitor server and application activity logs, triage security alerts and perform risk analysis.
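
Alert triage can be pictured with a short sketch. The example below assumes a hypothetical `Alert` record and a hand-written scoring rule (severity weight multiplied by asset criticality) that stands in for the score an AI system would learn from past incidents; the weights and field names are illustrative assumptions.

```python
from dataclasses import dataclass

# Illustrative weights; a real system would learn or tune these values.
SEVERITY_WEIGHT = {"low": 1, "medium": 3, "high": 7, "critical": 10}


@dataclass
class Alert:
    source: str
    severity: str
    asset_criticality: int  # 1 (test machine) to 5 (production database)


def risk_score(alert):
    """Combine the alert's severity with the importance of the affected asset."""
    return SEVERITY_WEIGHT[alert.severity] * alert.asset_criticality


def triage(alerts):
    """Return alerts sorted from highest to lowest risk."""
    return sorted(alerts, key=risk_score, reverse=True)


alerts = [
    Alert("ids", "medium", 5),
    Alert("antivirus", "high", 1),
    Alert("waf", "critical", 4),
]
queue = triage(alerts)
print([a.source for a in queue])
```

Sorting the queue this way lets analysts start with the alerts most likely to matter, which is where automated triage saves the most time.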

Ultimately, AI can also improve responses to security incidents. It may be able to provide precise information on vulnerabilities, and assist security and safety teams in their decisions on how to respond to an incident. Artificial intelligence analysis of a vulnerability or incident offers the opportunity to better understand why and how an attack may have been possible.

It is important to note, however, that AI can also present risks, of varying degrees of severity, to information systems security. First of all, hackers can also use AI to carry out more sophisticated, and therefore more damaging, attacks. Some cyber-attackers are particularly skilled at techniques for compromising AI systems. There are three main situations in which hackers may use such techniques:

- they can test their software’s ability to penetrate an AI by simulating attacks in order to analyze defenses;

- they can map AI patterns to develop more complex hacking strategies;

- they can try to fool an AI by poisoning it with false data (a form of adversarial machine learning), so that the AI treats attack behaviors as benign.
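
The poisoning scenario in the last point can be illustrated with a toy model. The sketch below assumes a deliberately simple detector that learns a single threshold on requests per second (the midpoint between the average benign and average malicious value); the data, the labels and the function names are all hypothetical, and real detectors are far more complex.

```python
from statistics import mean


def train_threshold(samples):
    """Learn a cut-off halfway between the benign and malicious averages."""
    benign = [x for x, label in samples if label == "benign"]
    malicious = [x for x, label in samples if label == "malicious"]
    return (mean(benign) + mean(malicious)) / 2


def is_malicious(requests_per_second, threshold):
    return requests_per_second > threshold


# Hypothetical training data: (requests per second, label).
clean = [(5, "benign"), (8, "benign"), (6, "benign"),
         (90, "malicious"), (110, "malicious")]
# The attacker injects attack-like traffic that is mislabeled as benign.
poison = [(95, "benign")] * 6

attack = 60  # the traffic level the attacker actually wants to use

clean_threshold = train_threshold(clean)
poisoned_threshold = train_threshold(clean + poison)

print(is_malicious(attack, clean_threshold))     # detected by the clean model
print(is_malicious(attack, poisoned_threshold))  # missed by the poisoned model
```

The mislabeled samples drag the learned threshold upward, so the same attack that the clean model catches passes unnoticed once the training data has been tampered with.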

If you’d like to find out more, read TechTarget’s article ‘How hackers use AI and machine learning to target enterprises’.

In addition, hackers sometimes use machine learning techniques themselves. These offer advanced capabilities that can be exploited to enhance the sophistication of cyberattacks. Hackers can mount enhanced phishing attacks (using machine learning algorithms to generate more convincing phishing emails that mimic the communication habits of legitimate senders) or build personalized malware (using machine learning to analyze the behavior of targeted users and their security vulnerabilities). Hackers can also use machine learning to detect and evade security systems (firewalls, intrusion detection systems, etc.) whose models and behavior they have analyzed in advance. The objective remains the same: to establish an index of vulnerabilities and use it to develop more sophisticated and intrusive attacks.

Some hackers even go so far as to modify code, enabling them to remotely manipulate a security system based on artificial intelligence. The aim may be to authorize access that is normally restricted, such as to confidential data, with serious consequences.

More broadly, the use of AI in cybersecurity can also present risks for privacy and human rights. Indeed, some artificial intelligence systems collect massive amounts of personal data. This data is then used to create user profiles (a practice known as profiling) and to target users with personalized advertising or phishing messages. ChatGPT is a particularly telling illustration of the risks associated with the processing of personal data by artificial intelligence. Its release has raised the question of data protection and compliance with European regulations. Indeed, exchanges between the user and the chatbot are collected by OpenAI. Questions have arisen regarding the user’s right to rectify data, as required by the European Union’s General Data Protection Regulation (GDPR).

In early 2023, Italy blocked access to ChatGPT, fearing mismanagement of its citizens’ personal data. In April 2023, the French ‘Commission Nationale de l’Informatique et des Libertés’ (CNIL) decided to open an investigation into possible breaches of the regulations currently in force. Spain also announced the opening of an investigation. For its part, the EU is closely monitoring the case through the European Data Protection Board (EDPB). In early May 2023, following the improvements made by OpenAI in terms of data protection and GDPR compliance, Italian authorities restored access to ChatGPT.

It’s worth noting that some conversational software, such as ChatGPT, has also been reported to offer inappropriate responses. Most AIs are programmed not to respond to requests for pornographic or terrorism-related content, for example. However, it has been demonstrated that by manipulating the AI through subtle, devious questions, a user can ultimately obtain an answer the AI was never intended to provide.

The example of the chemist is instructive: if you ask an AI what products are needed to create a homemade bomb, the AI won’t provide you with the list, let alone the instructions. On the other hand, if you pretend to be a chemist, provide the AI with some knowledge of chemicals and make it believe that this is a scientific experiment, it’s possible that the AI will give you tips on which products to mix to create an explosion. This kind of drift has been demonstrated and underlines the ease with which an AI can be hijacked.

The same applies to AI image generators. These are trained on so-called training data. Once they have been put into circulation, their databases are continually fed with images from the Internet. As a rule, image generators do not create horror images that could shock the user. Nevertheless, by combining the words “red lake” and “lying bodies”, a user can easily obtain an image that resembles lifeless bodies in a pool of blood. This is further evidence of the misuse that can be made of pre-configured AIs.

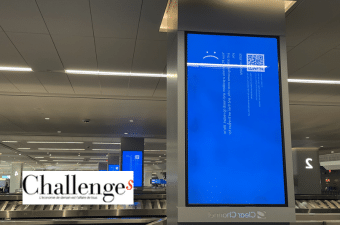

Lastly, like any IT tool, AI cannot be considered 100% reliable. In some cases, AI can be the source of security flaws that lead to data leaks, or even to an invasion of users’ privacy. Bing Chat, for example, which integrates OpenAI’s GPT-4 model into the Bing search engine, refuses to provide information on its configuration parameters. However, some users have used trick questions to force the AI to reveal this information. Once the information had been revealed, the AI, which draws its information from the Internet, threatened to attack its detractors.

Furthermore, when it comes to data confidentiality, chatbot users are generally not very careful about how their personal information is stored. If OpenAI’s databases were to leak, every user’s conversations could be exposed on the web or even on the dark web. Once this data is out in the open, cybercriminals may seek to exploit it (personal, banking or medical information, to name just a few examples) and use it for malicious purposes.
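
One practical precaution is to strip obvious personal data from a message before it is sent to a chatbot. The sketch below is a minimal illustration, assuming two deliberately simple regular expressions (one for email addresses, one for card-like digit sequences); the patterns and the `redact` function are hypothetical and would miss many real-world formats.

```python
import re

# Simplified patterns for illustration only; they will not catch every format.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def redact(prompt):
    """Replace email addresses and card-like numbers with placeholders."""
    prompt = EMAIL.sub("[EMAIL]", prompt)
    return CARD.sub("[CARD]", prompt)


text = "Refund card 4111 1111 1111 1111 and email jane.doe@example.com please"
print(redact(text))
```

Filtering of this kind does not replace user awareness, but it reduces the amount of sensitive information that would be exposed if stored conversations were ever leaked.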

While AI may offer many advantages for the future of cybersecurity, it can also present significant risks for the security of information systems and for privacy. It is essential to take all these risks into account when using AI, particularly when it comes to protecting your data, and to put in place appropriate security measures to mitigate them. To address the threats that the use of AI may entail, experts recommend that organizations:

- implement additional security controls;

- develop more robust and resilient artificial intelligence systems;

- carefully monitor the use of AI in all cybersecurity operations.

However, it would be counterproductive to downplay the tremendous technological breakthrough represented by AI, which should considerably enhance information systems security in the future. AI makes it possible to develop protection strategies, detect vulnerabilities and anticipate possible cyber-attacks, in order to propose ways of strengthening the security of all your data and devices.

As a general rule, you should always seek the advice of cybersecurity experts if you want to strengthen your cybersecurity with artificial intelligence tools. If you have any doubts, please take a look at our solutions and contact us via our website ubcom.eu.